Have you ever needed to gather all the information from a web page? Here's how to write a tool in PowerShell that will do that for you.

Web scraping is the art of parsing an HTML web page and gathering up elements in a structured manner. Since an HTML page has a particular structure, it's possible to parse through it and get semi-structured output. I've intentionally used the word "semi" here because, if you begin playing with web scraping, you'll see that most web pages aren't necessarily well-formed. Even when a page doesn't adhere to "well-formed" standards, it will still render properly in a browser, and that is what most webmasters care about.

But with a scripting language like PowerShell, a little ingenuity and some trial and error, it is possible to build a reliable web-scraping tool that pulls down information from a lot of different web pages.

Note: Web pages can vary wildly in their structure, and even a tiny change to a page can cause your web-scraping tool to blow up. Focus on the basics for this tool and build more specific tools around particular web pages, if you're so inclined.

The command of choice

The command of choice is Invoke-WebRequest. This command should be a staple in your web scraping arsenal. It greatly simplifies pulling down web page data, allowing you to focus your efforts on parsing out the data that you need.

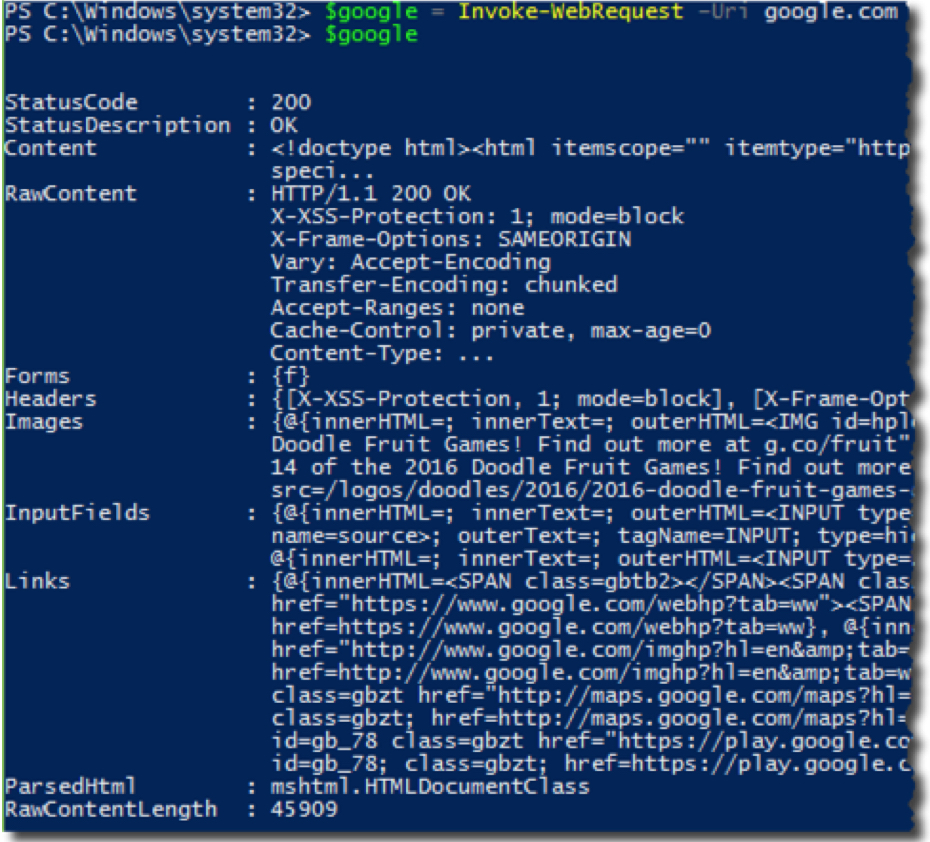

To get started, let's use a simple web page that everyone is familiar with, google.com, and see how a web scraping tool sees it. To do this, I'll pass google.com to the Uri parameter of Invoke-WebRequest and inspect the output.

$google = Invoke-WebRequest -Uri google.com

This is a representation of the entire google.com page all wrapped up in an object for you. Let's see what we can pull from this web page. Perhaps I need to find all the links on the page. No problem. I simply need to reference the Links property.

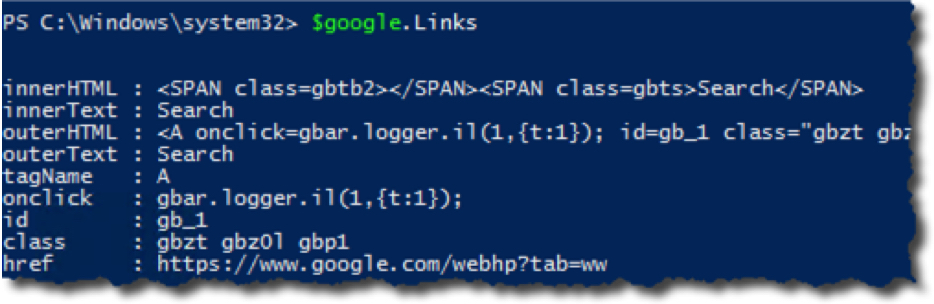

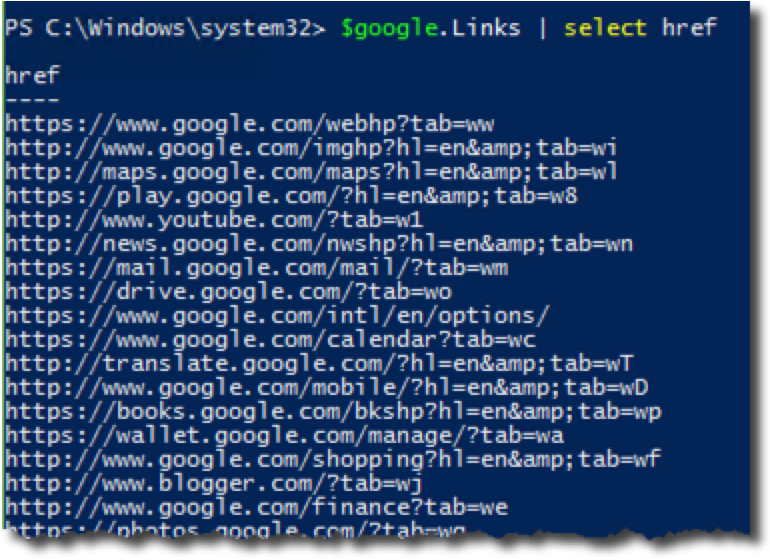

This will enumerate various properties about each link on the page. Perhaps I just want to see the URL that it links to.

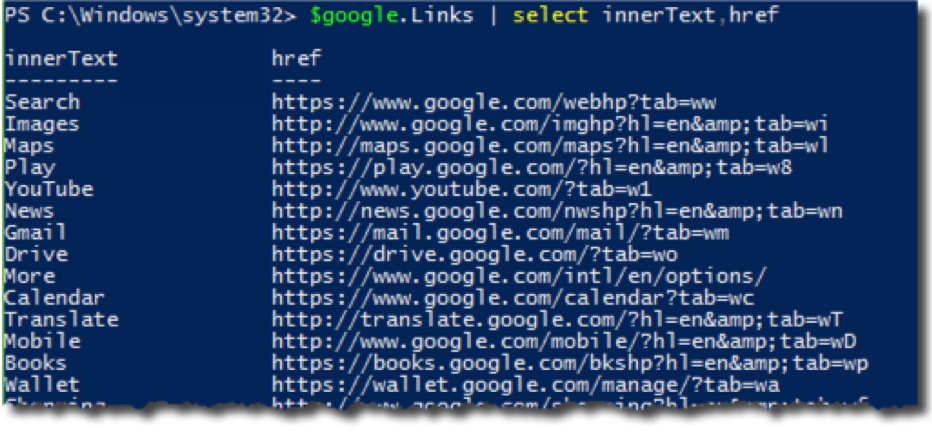
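In text form, the commands behind these steps might look something like the sketch below. `Get-LinkUrl` is a hypothetical helper name of my own, not a built-in command. It assumes only that the response object has a `Links` collection whose items carry an `href` property, which is what Invoke-WebRequest returns.

```powershell
# Hypothetical helper: return the unique, non-empty href values from a
# response object such as the one Invoke-WebRequest produces.
function Get-LinkUrl {
    param(
        [Parameter(Mandatory)]
        $Response
    )

    $Response.Links |
        Where-Object { $_.href } |              # skip anchors without an href
        Select-Object -ExpandProperty href |
        Sort-Object -Unique
}

# Example usage (requires network access):
# $google = Invoke-WebRequest -Uri google.com
# $google.Links                   # every property of every link
# $google.Links.href              # just the URLs
# Get-LinkUrl -Response $google   # URLs, de-duplicated and sorted
```

De-duplicating is a design choice on my part; most pages link to the same URL several times, and a scraper rarely needs to visit it more than once.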

How about the anchor text and the URL? Since this is just an object, it's easy to pull information like this.

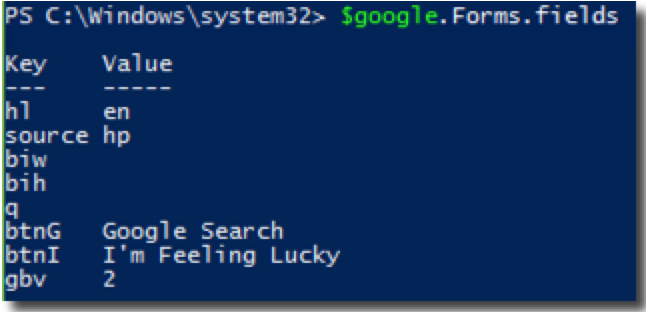
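One caveat when pulling anchor text: the `innerText` property is only populated when Invoke-WebRequest uses its full DOM parser (Windows PowerShell without -UseBasicParsing). The sketch below, with the hypothetical name `Get-LinkSummary`, falls back to stripping tags out of `outerHTML` when `innerText` is missing. The regex-based tag stripping is deliberately crude and is an assumption of this example, not a robust HTML parser.

```powershell
# Hypothetical helper: pair each link's anchor text with its URL.
function Get-LinkSummary {
    param(
        [Parameter(Mandatory)]
        $Response
    )

    foreach ($link in $Response.Links) {
        # innerText exists only when the full DOM parser was used;
        # otherwise fall back to removing tags from outerHTML.
        $text = $link.innerText
        if (-not $text -and $link.outerHTML) {
            $text = ($link.outerHTML -replace '<[^>]+>', '').Trim()
        }

        [pscustomobject]@{
            Text = $text
            Url  = $link.href
        }
    }
}

# Example usage (requires network access):
# $google = Invoke-WebRequest -Uri google.com
# $google.Links | Select-Object innerText, href   # full DOM parser only
# Get-LinkSummary -Response $google               # works in either mode
```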

We can also see what the famous google.com form with the input box looks like under the hood.
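A minimal sketch of inspecting forms follows. It assumes Windows PowerShell, where the response object exposes a `Forms` collection and each form has `Id`, `Method`, `Action` and `Fields` properties; newer PowerShell versions, which only do basic parsing, may not provide `Forms` at all. `Get-FormSummary` is a hypothetical helper name.

```powershell
# Hypothetical helper: summarize each form found on a page.
function Get-FormSummary {
    param(
        [Parameter(Mandatory)]
        $Response
    )

    foreach ($form in $Response.Forms) {
        [pscustomobject]@{
            Id     = $form.Id
            Method = $form.Method
            Action = $form.Action
            Fields = @($form.Fields.Keys)   # names of the form's input fields
        }
    }
}

# Example usage (requires network access and Windows PowerShell):
# $google = Invoke-WebRequest -Uri google.com
# $google.Forms                     # raw form objects
# $google.Forms[0].Fields           # field names and default values
# Get-FormSummary -Response $google
```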

Let's now take this another step and actually download some information from the web page. A common requirement is to download all images from a web page. Let's go over how to use PowerShell to enumerate all images on a page and download them to your local computer.

Gathering images

This time, we'll only get the basic information using the -UseBasicParsing parameter. This is quicker because Invoke-WebRequest doesn't crawl the DOM. It's almost necessary when querying massive web pages. For example, let's download all of the images on the cnn.com website.

$cnn = Invoke-WebRequest -Uri cnn.com -UseBasicParsing

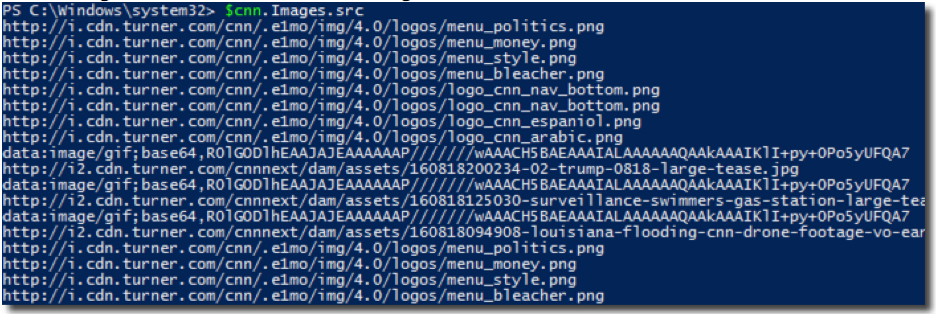

Now I'll figure out each URL that the image is hosted on.
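The image URLs live in the `src` property of each item in `Images`. Keep in mind that a `src` value is not always a full URL: it can be empty, relative, or protocol-relative (starting with `//`). The sketch below uses the .NET `System.Uri` class to turn those into absolute URLs. `Resolve-ImageUrl` and its parameter names are hypothetical, and the base URL in the usage comment is an assumption about where the page was served from.

```powershell
# Hypothetical helper: convert src values into absolute URLs.
function Resolve-ImageUrl {
    param(
        [Parameter(Mandatory)]
        [uri]$BaseUri,

        [string[]]$Src
    )

    foreach ($s in $Src) {
        # Skip <img> tags with no usable src attribute
        if ([string]::IsNullOrWhiteSpace($s)) { continue }

        # Uri(baseUri, relativeUri) leaves absolute URLs untouched and
        # resolves relative or protocol-relative ones against the base.
        [uri]::new($BaseUri, $s).AbsoluteUri
    }
}

# Example usage (requires network access):
# $cnn = Invoke-WebRequest -Uri cnn.com -UseBasicParsing
# $cnn.Images.src                                        # raw src values
# Resolve-ImageUrl -BaseUri 'https://www.cnn.com' -Src $cnn.Images.src
```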

Once we have the URLs, it's a simple matter of using Invoke-WebRequest again. Only this time, we'll use the -OutFile parameter to send the response to a file.

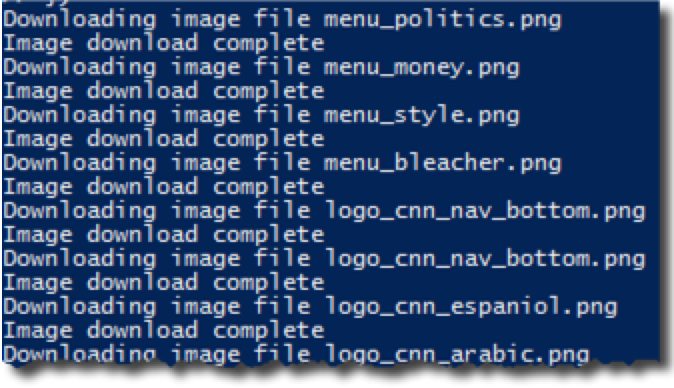

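# Download every image referenced on the page
@($cnn.Images.src).foreach({
    # Use the last segment of the URL as the local file name
    $fileName = $_ | Split-Path -Leaf
    Write-Host "Downloading image file $fileName"
    Invoke-WebRequest -Uri $_ -OutFile "C:\$fileName"
    Write-Host 'Image download complete'
})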

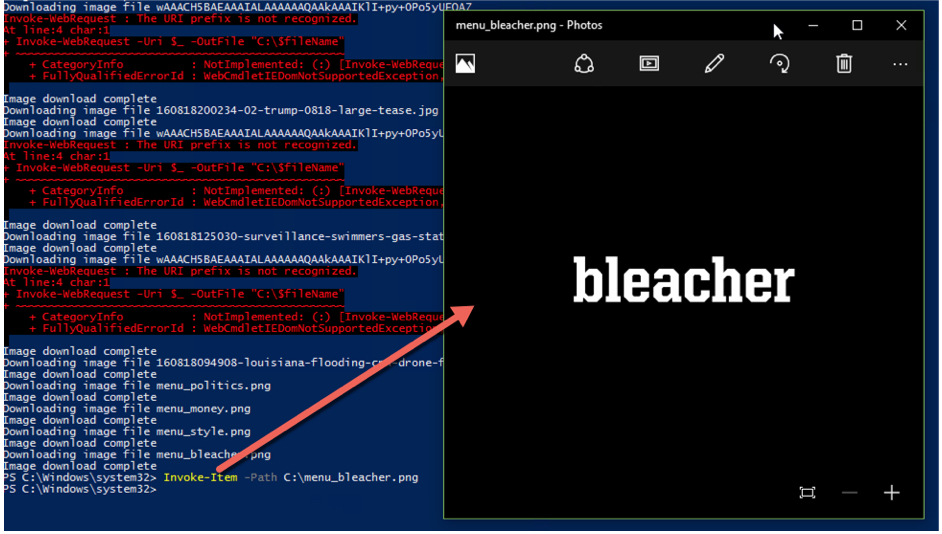
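Using Split-Path on a URL works for simple cases, but an image URL with a query string (for example `photo.jpg?w=100`) would produce a file name that Windows rejects. As a hedged alternative, the sketch below derives the name from the URL's path only. `Get-ImageFileName` is a hypothetical helper, it assumes the URL is absolute, and the `C:\Images` folder in the usage comment is just an example.

```powershell
# Hypothetical helper: derive a local file name from an absolute image URL.
function Get-ImageFileName {
    param(
        [Parameter(Mandatory)]
        [uri]$Url
    )

    # AbsolutePath excludes the query string and fragment;
    # unescape it so %20 becomes a space, and so on.
    $path = [uri]::UnescapeDataString($Url.AbsolutePath)
    [System.IO.Path]::GetFileName($path)
}

# Example usage (requires network access):
# $target = Join-Path -Path 'C:\Images' -ChildPath (Get-ImageFileName -Url $imageUrl)
# Invoke-WebRequest -Uri $imageUrl -OutFile $target
```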

In this case, I saved the images directly to the root of my C: drive, but you can easily change this to whatever directory you'd like. If you'd like to test the images directly from PowerShell, you can use the Invoke-Item command to pull up the image's associated viewer. You can see below that Invoke-WebRequest pulled down an image from cnn.com with the word "bleacher."

Use the code I went over today as a template for your own tool. Build a PowerShell function called Invoke-WebScrape, for example, with a few parameters like -Url or -Links. Once you have the basics down, you can easily create a customized tool to your liking that can be applied in many different places.
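As a starting point, a skeleton for such a function might look like the sketch below. The `-Url` and `-Links` parameters come from the suggestion above; the `-Images` switch and the choice to return the whole response when no switch is given are my own assumptions, so treat this as one possible shape rather than a finished tool.

```powershell
# Sketch of a reusable scraping function; extend it to suit your pages.
function Invoke-WebScrape {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string]$Url,

        # Return the URL of every link on the page
        [switch]$Links,

        # Return the src of every image on the page (assumed extra switch)
        [switch]$Images
    )

    # -UseBasicParsing skips the DOM parser on Windows PowerShell and is
    # accepted, but has no effect, on newer PowerShell versions.
    $response = Invoke-WebRequest -Uri $Url -UseBasicParsing

    if ($Links)  { $response.Links.href }
    if ($Images) { $response.Images.src }

    # With no switch, hand back the whole response for further parsing.
    if (-not ($Links -or $Images)) { $response }
}

# Example usage (requires network access):
# Invoke-WebScrape -Url google.com -Links
# Invoke-WebScrape -Url cnn.com -Images
```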